PaCaR: Improved Buffered I/O Locality on NUMA Systems with Page Cache Replication

Jérôme Coquisart (RWTH), Julien Sopena (LIP6), Redha Gouicem (RWTH)

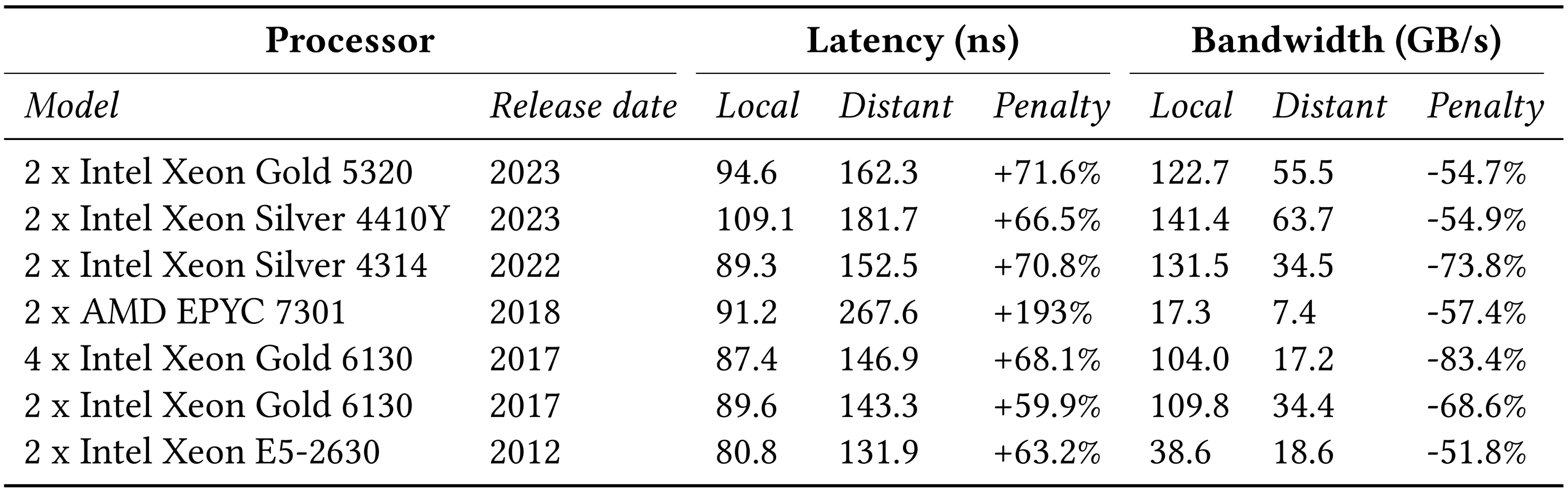

Performance and NUMA architectures

Multi-socket hardware with Non-Uniform Memory Access (NUMA) is predominant in datacenters

NUMA allows a greater number of cores, but comes with a set of challenges:

- Memory latency increases for accesses across nodes

- Memory bandwidth decreases for accesses across nodes

Linux’s page cache

- The page cache is the cache for disk I/O

- The cache uses the free memory of the system

- The cache is shared by all processes

- Pages are allocated with the first-touch policy (on the NUMA node of the CPU that first accesses them)

- Page cache pages are prime candidates for page reclamation

Performance implications

- Start RocksDB on node 0

- RocksDB performs some I/O

- The system populates the page cache

- The data is now in the page cache, in node 0's memory

- RocksDB is restarted on node 1

- The same data is accessed

We observe a 12% performance penalty: the page cache is not NUMA-aware, so the cached data stays on node 0 and every access from node 1 is remote
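To make the first-touch effect concrete, here is a minimal user-space toy model in C, not kernel code. The names `struct toy_page_cache`, `cache_read`, `count_remote_reads` and the 8-page file are hypothetical. Each cached page remembers the node it was allocated on when it was first read; a later reader on another node hits in the cache but only through remote memory.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define NR_PAGES 8 /* hypothetical file size, in pages */

/* Hypothetical toy page cache: for each file page, the NUMA node whose
 * memory holds the cached copy (-1 = not cached yet). */
struct toy_page_cache {
    int node_of[NR_PAGES];
};

static void cache_init(struct toy_page_cache *pc)
{
    for (size_t i = 0; i < NR_PAGES; i++)
        pc->node_of[i] = -1;
}

/* Read page `idx` from a task running on `cpu_node`.
 * Returns true if the access is served from another node's memory. */
static bool cache_read(struct toy_page_cache *pc, size_t idx, int cpu_node)
{
    if (pc->node_of[idx] < 0) {
        /* Miss: first touch places the page on the reader's node,
         * so the fill is local and not counted as remote. */
        pc->node_of[idx] = cpu_node;
        return false;
    }
    /* Hit: remote whenever the page lives on a different node. */
    return pc->node_of[idx] != cpu_node;
}

/* Read the whole file once from `cpu_node` and count remote accesses. */
static size_t count_remote_reads(struct toy_page_cache *pc, int cpu_node)
{
    size_t remote = 0;
    for (size_t i = 0; i < NR_PAGES; i++)
        if (cache_read(pc, i, cpu_node))
            remote++;
    return remote;
}
```

Running on node 0, then "restarting" on node 1, yields zero remote reads for the first pass and `NR_PAGES` remote reads for the second, mirroring the RocksDB scenario above.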

Fixing locality

- Manual solution: numactl (memory and CPU pinning)

- ❌ Doesn’t scale

- ❌ Doesn’t solve the problem for applications spanning multiple nodes

- Automatic solutions: NUMA balancing migrates memory and/or threads to improve locality:

- ✅ Works with anonymous memory

- ✅ Works with memory-mapped (mmap) files

- ❌ Does not work with the page cache

- ❌ Doesn’t solve the problem for applications spanning multiple nodes

How to improve locality for I/O intensive workloads on NUMA hardware?

PaCaR: Page Cache Replication

Page Cache Replication as a way to improve locality:

each NUMA node can get its own local copy of page cache pages (referred to as twins)

Goals of PaCaR:

- Improve NUMA locality on read-mostly workloads (especially spanning multiple nodes)

- Transparency to users and applications

- Limited memory consumption

- Ready to deploy at scale

- Minimal changes to existing stacks

Mechanisms:

- Replicating pages

- Managing consistency

- Limiting worst-case overhead

- Limiting memory footprint

Mechanism 1: Replicating pages

- On a local read: simply read the local page

- On a distant read: replicate the data locally

- The original page is called main

- The replicated page is called twin

- Subsequent reads are now local, no matter where the application runs
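The read path above can be sketched as a single-threaded user-space toy model in C. It is not PaCaR's in-kernel implementation: `struct replicated_page`, `pacar_read`, the fixed per-node twin array and the 64-byte `PAGE_SZ` are hypothetical choices made only to show the main/twin roles.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

#define NR_NODES 2
#define PAGE_SZ 64 /* toy page size, not a real kernel page size */

/* One physical copy of a file page, living in one node's memory. */
struct toy_page {
    int node;             /* NUMA node holding this copy */
    char data[PAGE_SZ];
};

/* Hypothetical per-file-page entry: the main copy plus optional twins. */
struct replicated_page {
    struct toy_page main;
    struct toy_page twin[NR_NODES];
    bool has_twin[NR_NODES];
};

/* Return the copy a reader running on `node` should use. */
static struct toy_page *pacar_read(struct replicated_page *rp, int node)
{
    if (rp->main.node == node)
        return &rp->main;                 /* local read: use the main */

    if (!rp->has_twin[node]) {            /* distant read: replicate */
        rp->twin[node].node = node;       /* "allocated" on the reader's node */
        memcpy(rp->twin[node].data, rp->main.data, PAGE_SZ);
        rp->has_twin[node] = true;
    }
    return &rp->twin[node];               /* later reads on this node stay local */
}
```

Only the first distant read pays the copy; afterwards both nodes read from their own memory.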

Mechanism 2: Managing consistency

Because the data is replicated, we need to guarantee consistent access to the page cache.

- Always write to the main page (never write directly to a twin)

- Invalidate all associated twins using a single flag switch

- Refresh invalidated twins when reading them: copy the data back from the main

- Reads can always use the local page, and the system remains consistent
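A toy C model of these rules follows, under one loudly stated assumption: the "single flag switch" is modeled here as a generation counter on the main that every write bumps, so all twins become stale with one update. PaCaR's actual flag may work differently; `pacar_write`, `pacar_read` and `struct toy_twin` are hypothetical, and the model ignores locking.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

#define NR_NODES 2
#define PAGE_SZ 64 /* toy page size */

/* Hypothetical twin: a node-local copy tagged with the main's generation. */
struct toy_twin {
    char data[PAGE_SZ];
    unsigned long gen;    /* main generation this copy was taken from */
    bool present;
};

struct replicated_page {
    int main_node;
    unsigned long gen;    /* ASSUMPTION: stands in for the single invalidation flag */
    char main_data[PAGE_SZ];
    struct toy_twin twin[NR_NODES];
};

/* All writes go to the main page; twins are never written directly. */
static void pacar_write(struct replicated_page *rp, const char *buf)
{
    strncpy(rp->main_data, buf, PAGE_SZ - 1);
    rp->main_data[PAGE_SZ - 1] = '\0';
    rp->gen++;            /* one update invalidates every twin at once */
}

/* Reads use the local copy, lazily refreshing a stale twin from the main. */
static const char *pacar_read(struct replicated_page *rp, int node)
{
    if (node == rp->main_node)
        return rp->main_data;

    struct toy_twin *t = &rp->twin[node];
    if (!t->present || t->gen != rp->gen) {
        memcpy(t->data, rp->main_data, PAGE_SZ);
        t->gen = rp->gen;
        t->present = true;
    }
    return t->data;
}
```

The write cost does not grow with the number of twins; the copy-back cost is paid only by nodes that actually read the page again.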

Mechanism 3: Prevent distant writes

- Distant writes are inevitable to ensure consistency: every write goes to the main page, which may live on another node

- If a node makes many remote writes:

- PaCaR detects it and triggers a switch main

- The main page becomes a twin, and the writing node's twin becomes the new main

- Writes from that node are now local
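The switch-main decision can be sketched in the same toy style. The per-node remote-write counter and the `SWITCH_THRESHOLD` of 4 are hypothetical; the poster does not say how PaCaR detects "a lot of" remote writes. Twin staleness reuses a generation counter, as an assumption.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

#define NR_NODES 2
#define PAGE_SZ 64
#define SWITCH_THRESHOLD 4 /* hypothetical tuning knob */

struct toy_copy {
    char data[PAGE_SZ];
    unsigned long gen;     /* main generation this copy reflects */
    bool present;
};

struct replicated_page {
    int main_node;
    unsigned long gen;                /* generation of the main copy */
    struct toy_copy copy[NR_NODES];   /* copy[main_node] is the main */
    unsigned remote_writes[NR_NODES]; /* remote writes per node since last switch */
};

/* Promote the copy on `node` to main; the old main becomes a twin. */
static void switch_main(struct replicated_page *rp, int node)
{
    struct toy_copy *old = &rp->copy[rp->main_node];
    struct toy_copy *target = &rp->copy[node];

    if (!target->present || target->gen != rp->gen) { /* bring twin up to date */
        memcpy(target->data, old->data, PAGE_SZ);
        target->gen = rp->gen;
        target->present = true;
    }
    rp->main_node = node;
    memset(rp->remote_writes, 0, sizeof(rp->remote_writes));
}

/* Write from `node`. Returns true if the write hit remote memory. */
static bool pacar_write(struct replicated_page *rp, int node, const char *buf)
{
    if (node != rp->main_node &&
        ++rp->remote_writes[node] >= SWITCH_THRESHOLD)
        switch_main(rp, node);        /* too many remote writes: move the main */

    bool remote = node != rp->main_node;
    struct toy_copy *m = &rp->copy[rp->main_node];
    strncpy(m->data, buf, PAGE_SZ - 1);
    m->data[PAGE_SZ - 1] = '\0';
    m->gen = ++rp->gen;               /* every other copy is now stale */
    return remote;
}
```

With a threshold of 4, the first three writes from node 1 are remote and the fourth triggers the switch, after which node 1 writes locally and node 0 holds a stale twin.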

Mechanism 4: Limiting memory footprint

- Replicate only pages that are accessed on a distant node:

- Not every page of the page cache gets replicated

- Use the existing Linux PFRA (Page Frame Reclamation Algorithm) to push out less-used pages:

- Integrate with the existing Linux LRU algorithm

- Only the most-used replicated pages are kept
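The footprint policy can be illustrated with a small array-based LRU of twins on one node. This is a stand-in: PaCaR reuses the kernel's own LRU lists and PFRA, while `struct twin_lru`, `access_page` and the budget `TWIN_CAPACITY` of 3 are hypothetical. Local accesses never create twins, and when the budget is full the least recently used twin is dropped.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define TWIN_CAPACITY 3 /* hypothetical twin budget on one node */

/* Toy LRU of twin page ids on one node; page[0] is the most recently used.
 * Stands in for the kernel LRU lists scanned by the PFRA. */
struct twin_lru {
    long page[TWIN_CAPACITY];
    size_t len;
};

/* Access page `id`, whose main lives on `main_node`, from `node`.
 * Returns true if the access was distant (served by an existing or new twin). */
static bool access_page(struct twin_lru *lru, long id, int main_node, int node)
{
    if (main_node == node)
        return false;                 /* local page: never replicated */

    size_t pos = lru->len;            /* look for an existing twin */
    for (size_t i = 0; i < lru->len; i++)
        if (lru->page[i] == id) { pos = i; break; }

    if (pos == lru->len) {            /* no twin yet: replicate */
        if (lru->len < TWIN_CAPACITY)
            lru->len++;
        pos = lru->len - 1;           /* tail slot; if full, its twin is evicted */
    }
    for (size_t i = pos; i > 0; i--)  /* move the twin to the front */
        lru->page[i] = lru->page[i - 1];
    lru->page[0] = id;
    return true;
}

static bool has_twin(const struct twin_lru *lru, long id)
{
    for (size_t i = 0; i < lru->len; i++)
        if (lru->page[i] == id)
            return true;
    return false;
}
```

Accessing pages 1, 2, 3, then 1 again, then 4 remotely leaves twins for 4, 1 and 3: page 2, the least recently used, is evicted.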

Experimental setup

- Our test bench:

- 2 NUMA nodes: Intel(R) Xeon(R) Silver 4410Y

- 24 threads per node

- 256 GiB of total memory

- Our benchmarks:

- Microbenchmarks using fio

- Simulation using Filebench

- Real-world benchmark with RocksDB

- Baseline: Linux 6.12 LTS kernel with NUMA balancing

Simulation with Filebench

- Performance gains of up to 40%

- No noticeable overhead for other workloads

How does PaCaR react to memory pressure?

- Switch main on eviction: a new main is elected when the main page is evicted

- Pressure mitigation: replication stops under memory pressure
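Both reactions can be sketched in a toy C model. The free-page count and `low_watermark` parameters, and the rule "elect the first surviving twin", are hypothetical simplifications; the poster does not detail how PaCaR measures pressure or picks the new main. The model also assumes a promoted twin is up to date.

```c
#include <assert.h>
#include <stdbool.h>

#define NR_NODES 2

/* Hypothetical toy state for one cached file page. */
struct replicated_page {
    int main_node;          /* -1 once the page has left the cache */
    bool twin[NR_NODES];    /* twin present on node i */
};

/* Pressure mitigation: replicate only while free memory is above a watermark. */
static bool should_replicate(unsigned long free_pages, unsigned long low_watermark)
{
    return free_pages > low_watermark;
}

/* Distant read: create a twin unless the node is under memory pressure. */
static void distant_read(struct replicated_page *rp, int node,
                         unsigned long free_pages, unsigned long low_watermark)
{
    if (node != rp->main_node && should_replicate(free_pages, low_watermark))
        rp->twin[node] = true;
}

/* Evict the copy on `node`. Evicting the main elects a surviving twin
 * (assumed up to date) as the new main instead of dropping the data. */
static void evict(struct replicated_page *rp, int node)
{
    if (node != rp->main_node) {
        rp->twin[node] = false;   /* dropping a twin loses nothing: main is current */
        return;
    }
    for (int n = 0; n < NR_NODES; n++) {
        if (rp->twin[n]) {        /* switch main on eviction */
            rp->twin[n] = false;
            rp->main_node = n;
            return;
        }
    }
    rp->main_node = -1;           /* no twin left: the page leaves the cache */
}
```

Electing a twin keeps the data cached when the main is reclaimed, while the watermark check keeps replication from competing with regular page cache usage once memory runs low.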

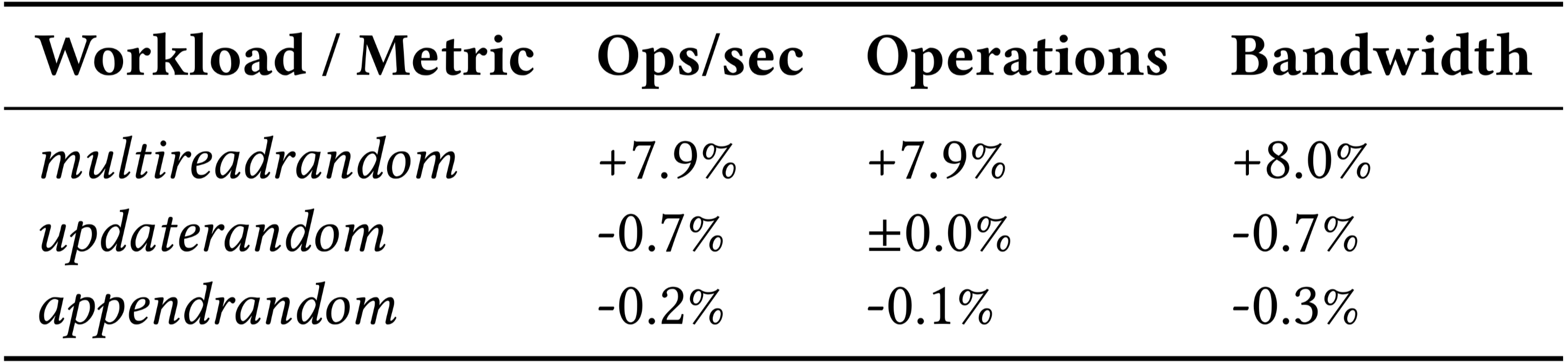

Real-world application: RocksDB

- 8% gain in read performance

- Minimal impact on write-heavy workloads

Conclusion

PaCaR enables a NUMA-aware page cache:

- Dynamic page cache replication

- Consistent writes

- Integration with Linux’s memory management

- In-kernel implementation for transparency to the users

Evaluation shows:

- Up to 40% improvement in Filebench

- Up to 8% improvement in RocksDB

- Minimal overhead for write-intensive workloads

Future work:

- Support for mmap (ongoing project)

PaCaR overview